
Google Assistant Developer Quick Start

Episode 105 written by Jeff Delaney

Natural language processing is getting scary good - just watch the Google I/O 2018 keynote to see what I mean. In this lesson, my goal is to teach you how to start using the Google Assistant to build dynamic conversational apps for your business.

Full source code: Google Assistant Cloud Function demo.

How to Build Google Assistant Apps

There are three main ways to build a Google Assistant app - (1) use a template, (2) use Dialogflow, or (3) use the Actions SDK.

Dialogflow (recommended) is a high-level tool for handling natural language processing, and it’s a real joy to work with. We have used it to build chatbots for Slack in the past, and it wraps the Actions SDK so you can build your app with minimal configuration code.

Actions SDK lets you create entry points, or Actions, that a user can invoke and that are then handled in a backend environment. It provides the most control, but requires you to generate all of the messages from scratch in your backend.

Templates are nice if they fit your needs, and they currently support the following use cases: Trivia Games, Flash Cards, and Quizzes. If that’s what you’re building, great! Just use a template and you’re done.

Technical Conversation Breakdown

Here’s how a conversation works step-by-step from a technical perspective:

User: “Hey Google, let’s talk to AngularFirebase”

Google finds the app based on the name the developer registered.

Assistant: “Ok, getting the test version of AngularFirebase”

Once the app is found, Google triggers its Default Welcome Intent in Dialogflow. You are now in a Dialogflow session with the agent.

App: “Howdy, AngularFirebase here. How can I help you?”

At this point, the app is waiting for the user to trigger an intent in Dialogflow by saying something. It’s good practice to have your agent reply with helpful hints about the types of intents that are available, e.g. “Welcome! Say something like place order or find reservation.”

User: “What was the last episode?”

The user triggers the Get Latest Episode intent, which is fulfilled by a Firebase Cloud Function.

App: “The last episode was 104 about building cool stuff…” (conversation ends)

The Firebase Cloud Function reaches out to the website to scrape the necessary information. It then uses the actions-on-google Node.js library to send a response that the Google Assistant can read.

Once this intent is fulfilled, the conversation closes, but you as the developer can keep it going as long as needed.
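Whether a turn ends the conversation or keeps the microphone open comes down to a single flag in the webhook response. The sketch below is a minimal illustration, assuming the Dialogflow v2 webhook response format with an Actions on Google payload; only the fields used are typed, and the `speak` helper is hypothetical. With the actions-on-google library you would call `conv.ask()` or `conv.close()` instead of building this JSON yourself.

```typescript
// Minimal subset of a Dialogflow v2 webhook response carrying an
// Actions on Google payload. Only the fields used below are typed.
interface SimpleResponseItem {
  simpleResponse: { textToSpeech: string };
}

interface WebhookResponse {
  fulfillmentText: string;
  payload: {
    google: {
      // true keeps the mic open for another turn; false ends the conversation
      expectUserResponse: boolean;
      richResponse: { items: SimpleResponseItem[] };
    };
  };
}

// Hypothetical helper: build a spoken reply and decide whether the turn ends.
function speak(text: string, keepOpen: boolean): WebhookResponse {
  return {
    fulfillmentText: text,
    payload: {
      google: {
        expectUserResponse: keepOpen,
        richResponse: { items: [{ simpleResponse: { textToSpeech: text } }] },
      },
    },
  };
}

// Welcome turn: ask a question and wait for the user (like conv.ask).
const welcome = speak("Howdy, AngularFirebase here. How can I help you?", true);
// Fulfilled intent: answer and end the conversation (like conv.close).
const answer = speak("The last episode was 104.", false);
```

Keeping the conversation going is just a matter of answering with `expectUserResponse: true` and handling the next intent the user triggers.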

Build a Dynamic Assistant with Dialogflow and Firebase

In this section, we will build a Google Assistant app using Dialogflow to control the conversation dynamics, then a Firebase Cloud Function will fulfill requests that require data from the outside world. So what will our assistant do? It will scrape the AngularFirebase.com lesson feed for the last episode and tell the end user its title.

Design the Conversation in Dialogflow

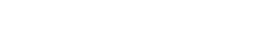

Go to the Dialogflow dashboard, create an agent, and give it a new intent named Get Latest Episode.

Now train it to recognize a few phrases.

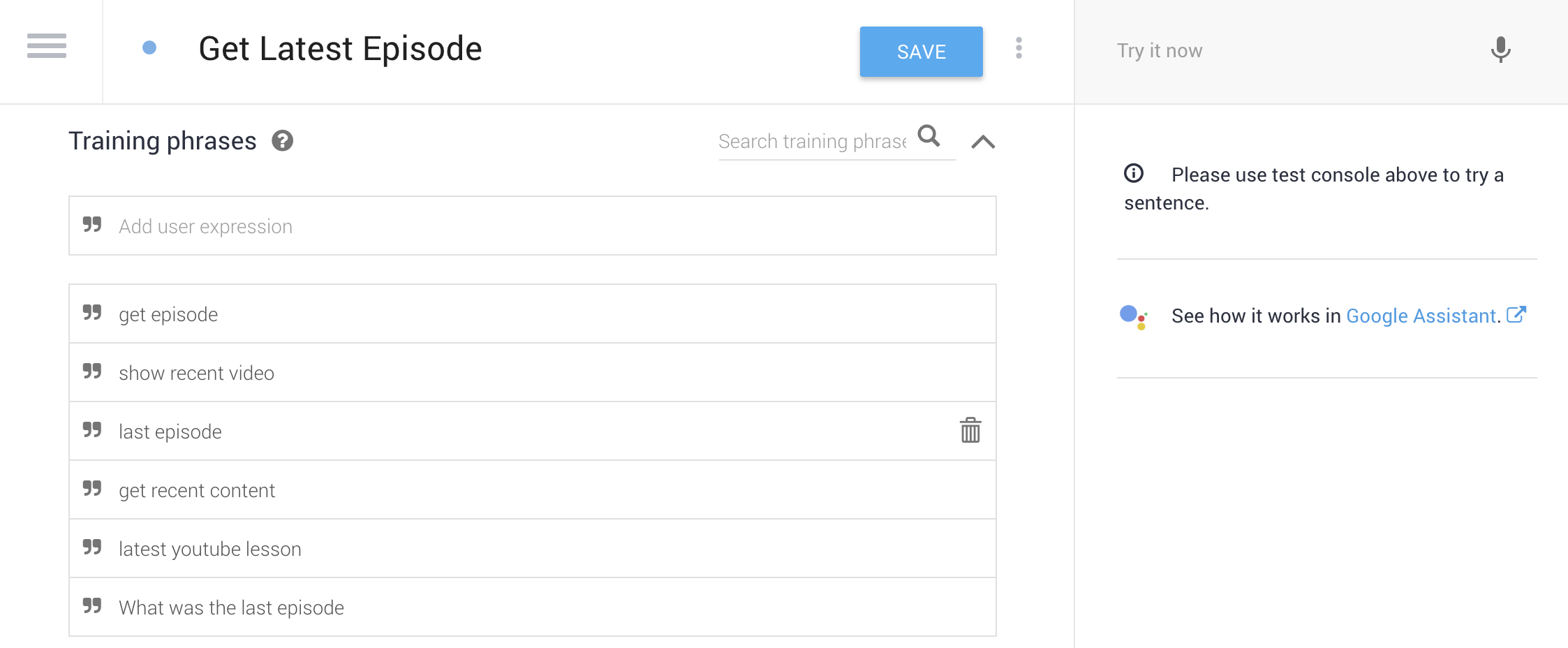

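Next, configure the intent to fulfill requests with a webhook: enable the webhook call in the intent’s Fulfillment section, then point the agent’s Fulfillment webhook URL at your deployed Cloud Function.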
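With the webhook enabled, Dialogflow POSTs a JSON body to your endpoint each time the intent matches. Here is a minimal sketch, assuming the Dialogflow v2 webhook request format and typing only a few of its fields. The hypothetical `fulfill` function reads `queryResult.intent.displayName` (the intent name you typed in the console) and picks a reply. The reply strings and session ID are placeholders.

```typescript
// Subset of a Dialogflow v2 webhook request; real requests carry more fields.
interface WebhookRequest {
  session: string;
  queryResult: {
    queryText: string; // what the user actually said
    intent: { displayName: string }; // e.g. "Get Latest Episode"
  };
}

// Hypothetical router: switch on the intent's display name and return
// the text Dialogflow should say back to the user.
function fulfill(req: WebhookRequest): { fulfillmentText: string } {
  switch (req.queryResult.intent.displayName) {
    case "Get Latest Episode":
      return { fulfillmentText: "Let me check the latest episode for you." };
    default:
      return { fulfillmentText: `Sorry, I can't handle "${req.queryResult.queryText}" yet.` };
  }
}

const sample: WebhookRequest = {
  session: "projects/my-agent/agent/sessions/abc123", // placeholder IDs
  queryResult: {
    queryText: "what was the last episode",
    intent: { displayName: "Get Latest Episode" },
  },
};

const reply = fulfill(sample); // { fulfillmentText: "Let me check the latest episode for you." }
```

The actions-on-google library does this parsing and dispatching for you. The sketch only shows what travels over the wire.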

Initialize Firebase Cloud Functions

First, install the [Firebase CLI tools](https://github.com/firebase/firebase-tools).

```shell
npm install firebase-tools -g
```

Then create an empty directory and initialize functions using TypeScript.

```shell
mkdir myAssistant && cd myAssistant
firebase init functions
```

Lastly, we will add the Actions on Google package, which also provides everything we need to work with Dialogflow.

```shell
cd functions
npm install actions-on-google --save
```

Our initial function imports in index.ts look like this:

```typescript
import * as functions from 'firebase-functions';
import { dialogflow } from 'actions-on-google';
```

Scraping the Web for Content

The beauty of a Cloud Function is that we can do anything one would expect in a Node.js backend. In this case, we will build a simple web scraper that can parse data from a webpage and format it as text that the assistant can say to the end user.

```shell
npm install node-fetch cheerio --save
```

Let’s use node-fetch to make HTTP requests and Cheerio to grab DOM elements like we would with jQuery. This makes working with webpages in Node feel just like the browser.

```typescript
async function scrapePage() { /* ... */ }
```

Sweet, we just built a web scraper in a few simple lines of code.
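The extraction step of that scraper is easy to see in isolation. Below is a dependency-free sketch that pulls the newest episode’s title and link out of an HTML string using a regular expression. Two assumptions are worth flagging. First, the markup `<h2 class="post-title"><a href="URL">TITLE</a></h2>` is a hypothetical stand-in for the real lesson feed. Second, the regex replaces Cheerio’s selectors. That swap works only for a fixed, known template, so keep Cheerio in the real function.

```typescript
interface Episode {
  title: string;
  url: string;
}

// Hypothetical pattern for <h2 class="post-title"><a href="...">Title</a></h2>.
// Group 1 captures the href, group 2 the (possibly nested) link text.
const POST_TITLE =
  /<h2[^>]*class="[^"]*\bpost-title\b[^"]*"[^>]*>\s*<a[^>]*href="([^"]+)"[^>]*>([\s\S]*?)<\/a>/i;

// Returns the first matching post on the page (assumed to be the newest),
// or null when the markup is not found.
function parseLatestEpisode(html: string): Episode | null {
  const match = POST_TITLE.exec(html);
  if (!match) return null;
  // Strip tags nested inside the link text and collapse whitespace.
  // HTML entities are not decoded here.
  const title = match[2].replace(/<[^>]+>/g, "").replace(/\s+/g, " ").trim();
  return { title, url: match[1] };
}
```

In the Cloud Function you would pass it the page text returned by node-fetch, e.g. `parseLatestEpisode(await (await fetch(url)).text())`.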

Respond to Dialogflow with Rich Content

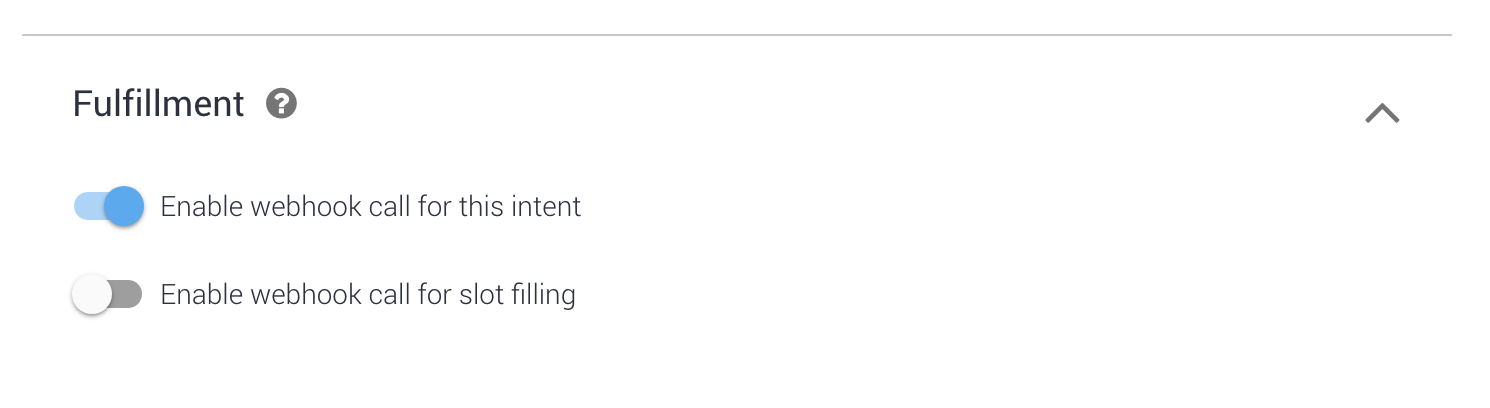

Google Assistant can respond with more than just simple text or speech. We can provide cards that contain images and deep links to deliver a more engaging experience to the end user.
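For reference, here is roughly what such a card looks like on the wire. This minimal sketch assumes the Actions on Google conversation format that sits under `payload.google` in a Dialogflow webhook response. The `cardResponse` helper and the episode values are hypothetical. Actions on Google expects a rich response to start with a simple (spoken) response, so the card is the second item. With the actions-on-google library you would use its `BasicCard`, `Image`, and `Button` classes rather than writing this by hand.

```typescript
interface BasicCard {
  title: string;
  image: { url: string; accessibilityText: string };
  buttons: { title: string; openUrlAction: { url: string } }[];
}

interface RichItem {
  simpleResponse?: { textToSpeech: string };
  basicCard?: BasicCard;
}

interface GooglePayload {
  expectUserResponse: boolean;
  richResponse: { items: RichItem[] };
}

interface Episode {
  title: string;
  url: string;
  imageUrl: string;
}

// Hypothetical helper: speech first, then a card with an image and a deep link.
function cardResponse(ep: Episode): GooglePayload {
  return {
    expectUserResponse: false, // this answer ends the conversation
    richResponse: {
      items: [
        // The first item must be a simple response (what the Assistant says).
        { simpleResponse: { textToSpeech: `The latest episode is ${ep.title}.` } },
        {
          basicCard: {
            title: ep.title,
            image: { url: ep.imageUrl, accessibilityText: ep.title },
            // Link button that opens the lesson page.
            buttons: [{ title: "Watch it", openUrlAction: { url: ep.url } }],
          },
        },
      ],
    },
  };
}

const googlePayload = cardResponse({
  title: "Google Assistant Quick Start",
  url: "https://example.com/lessons/105",
  imageUrl: "https://example.com/images/105.png",
});
```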

Dialogflow fulfillment is conceptually very similar to Express.js: we listen for an intent or action to be triggered, then handle it.

```typescript
app.intent('Some Intent', (conv) => conv.close('Hello from the webhook!'));
```
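The Express comparison can be made concrete with a toy router in plain TypeScript that mirrors the `app.intent(name, handler)` registration pattern. `MiniAssistant` and its `Conv` object are hypothetical stand-ins, not the actions-on-google API. The real library builds `conv` from the webhook request and turns your `ask`/`close` calls into the response for you.

```typescript
// Hypothetical conversation object: collects replies and whether the turn ends.
class Conv {
  readonly query: string;
  replies: string[] = [];
  closed = false;

  constructor(query: string) {
    this.query = query; // the user's utterance that triggered the intent
  }

  ask(text: string): void {
    this.replies.push(text); // reply and keep listening
  }

  close(text: string): void {
    this.replies.push(text); // reply and end the conversation
    this.closed = true;
  }
}

type IntentHandler = (conv: Conv) => void;

// Like app.get('/path', handler) in Express, but keyed by intent name.
class MiniAssistant {
  private handlers = new Map<string, IntentHandler>();

  intent(name: string, handler: IntentHandler): this {
    this.handlers.set(name, handler);
    return this; // allow chaining
  }

  handle(intentName: string, query: string): Conv {
    const handler = this.handlers.get(intentName);
    if (!handler) throw new Error(`No handler registered for intent "${intentName}"`);
    const conv = new Conv(query);
    handler(conv);
    return conv;
  }
}

const app = new MiniAssistant()
  .intent("Default Welcome Intent", (conv) => conv.ask("Howdy, AngularFirebase here. How can I help you?"))
  .intent("Get Latest Episode", (conv) => conv.close("The last episode was 104."));
```

The handlers here are synchronous for brevity. actions-on-google also accepts handlers that return a Promise (like the `async (conv) =>` handler in this lesson), which is what lets the scraper finish before the reply is sent.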

But we often want more fine-grained control than this. Let’s customize the way our assistant responds based on a device’s text, speech, or multimedia capabilities.

```typescript
app.intent('Get Latest Episode', async (conv) => { /* ... */ });
```

Now we get this nice card response when the intent is invoked on a mobile device or tablet.
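On a raw webhook request, the device information arrives as a list of surface capabilities. This minimal sketch assumes the Dialogflow v2 request format, in which the original Actions on Google request sits under `originalDetectIntentRequest.payload`. The helper names and the card-versus-speech policy are hypothetical. In the actions-on-google library the same check is `conv.screen` or `conv.surface.capabilities.has('actions.capability.SCREEN_OUTPUT')`.

```typescript
interface Capability {
  name: string; // e.g. "actions.capability.SCREEN_OUTPUT"
}

// Only the path to the surface capabilities is typed; every level is optional.
interface WebhookRequest {
  originalDetectIntentRequest?: {
    payload?: { surface?: { capabilities?: Capability[] } };
  };
}

const SCREEN_OUTPUT = "actions.capability.SCREEN_OUTPUT";

// True when the device that sent the request can display cards and images.
function hasScreen(req: WebhookRequest): boolean {
  const caps = req.originalDetectIntentRequest?.payload?.surface?.capabilities ?? [];
  return caps.some((c) => c.name === SCREEN_OUTPUT);
}

// Hypothetical policy: speech only for smart speakers, card + speech for screens.
function replyKind(req: WebhookRequest): "card" | "speech" {
  return hasScreen(req) ? "card" : "speech";
}
```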

Deploying the Assistant

An Assistant app is deployed in a manner similar to an Android app on the Google Play Store. You fill out a bunch of information about the app, then have the option of an Alpha, Beta, or Production release.

For testing, however, you can start using the app right away on your own connected devices that are linked to your Google (Gmail) account. Just say “Hey Google, talk to your-app-name.”