Advanced Cloud Vision OCR Text Extraction

Episode 84 written by Jeff Delaney

In this lesson, we will expand on the Cloud Vision API concepts introduced in the Ionic SeeFood app from the last lesson. This time around we will perform text extraction (and a few other tricks) from a raw image. This is a highly sought-after feature in business applications that still work with non-digitized text documents.

The Cloud Vision Node.js documentation is a good reference to keep by your side.

The Firebase Cloud Function in this lesson will work with any frontend framework. Just upload a file to the bucket and let Cloud Vision work its magic.

Getting Started

You actually don’t need a frontend app to experiment with this technology - just a Firebase storage bucket. I recommend creating a dedicated bucket for the invocation of cloud functions.

If you’re new to functions, you can generate your backend code with the following command:

firebase init functions

Google Cloud Vision Advanced Techniques

We’re going to explore a few of the more advanced features in Google Cloud Vision and talk about how you might use them to build an engaging UX. My goal is to give you some inspiration that you can use when building your next app.

Image Text Extraction

Optical Character Recognition, or OCR, is optimized by Google’s deep learning algorithms and made available in the API. The response is an array of objects, each containing a piece of extracted text.

The first element in the response array contains the fully parsed text. In most cases, this is all you need, but you can also get the position of each individual word. It also responds with a bounding box for each object, allowing you to determine where exactly this text appears in the image.
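To make the shape of that response concrete, here is a minimal sketch that splits the array into the full text and a list of per-word boxes. The `TextAnnotation` interface is a hand-written subset of the response fields (`description` and `boundingPoly.vertices`), and `parseTextAnnotations` and `WordBox` are hypothetical names invented for this example, not part of the client library.

```typescript
// Hand-written subset of a Vision text annotation (an assumption for this sketch).
interface Vertex { x?: number; y?: number; }
interface TextAnnotation {
  description?: string;
  boundingPoly?: { vertices?: Vertex[] };
}

// Hypothetical view model: one axis-aligned box per extracted word.
interface WordBox {
  text: string;
  left: number;
  top: number;
  width: number;
  height: number;
}

// Element 0 holds the fully parsed text; the remaining elements are individual words.
function parseTextAnnotations(
  annotations: TextAnnotation[]
): { fullText: string; words: WordBox[] } {
  const [first, ...rest] = annotations;
  const fullText = first?.description ?? '';

  const words = rest.map((a): WordBox => {
    const vertices = a.boundingPoly?.vertices ?? [];
    // Treat a missing coordinate as 0.
    const xs = vertices.map(v => v.x ?? 0);
    const ys = vertices.map(v => v.y ?? 0);
    const left = xs.length ? Math.min(...xs) : 0;
    const top = ys.length ? Math.min(...ys) : 0;
    const right = xs.length ? Math.max(...xs) : 0;
    const bottom = ys.length ? Math.max(...ys) : 0;
    return {
      text: a.description ?? '',
      left,
      top,
      width: right - left,
      height: bottom - top,
    };
  });

  return { fullText, words };
}
```

The boxes are flattened to axis-aligned rectangles, which is usually enough for drawing highlights over the image. For rotated text you would keep the four vertices instead.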

Inspiration - Build an image-to-text tool as a PWA.

Facial Detection

Facial detection, not to be confused with facial recognition, is used to extract data from the people (faces) present in an image.

The response includes the exact position of every facial feature (eyebrows, nose, etc), which makes it especially useful for positioning an overlay image. Imagine you are an apparel company and you want users to upload an image, then try on different hats or glasses. The Vision API would allow you to position the overlay precisely without much effort.
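As a rough illustration of the overlay idea, the sketch below takes the eye landmarks from a single face annotation and works out where a pair of glasses could be drawn. The `LEFT_EYE` and `RIGHT_EYE` landmark types come from the Vision API, but `placeGlasses`, `OverlayPlacement`, and the `widthFactor` of 2 are assumptions made up for this example, so tune them against your own artwork.

```typescript
// Hand-written subset of a Vision face annotation (an assumption for this sketch).
interface Position { x: number; y: number; z?: number; }
interface Landmark { type: string; position: Position; }
interface FaceAnnotation { landmarks: Landmark[]; }

// Hypothetical placement data for an overlay image, in image pixels.
interface OverlayPlacement {
  centerX: number;
  centerY: number;
  width: number;
  rotationDeg: number;
}

// Center the overlay between the eyes, scale it by the eye distance,
// and rotate it to follow the line between the two eyes.
function placeGlasses(face: FaceAnnotation, widthFactor = 2): OverlayPlacement | null {
  const find = (type: string) => face.landmarks.find(l => l.type === type)?.position;
  const left = find('LEFT_EYE');
  const right = find('RIGHT_EYE');
  if (!left || !right) return null; // cannot place the overlay without both eyes

  const dx = right.x - left.x;
  const dy = right.y - left.y;
  const eyeDistance = Math.sqrt(dx * dx + dy * dy);

  return {
    centerX: (left.x + right.x) / 2,
    centerY: (left.y + right.y) / 2,
    width: eyeDistance * widthFactor,
    rotationDeg: (Math.atan2(dy, dx) * 180) / Math.PI,
  };
}
```

The returned values map directly onto a CSS transform or a canvas `drawImage` call in whatever frontend you use.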

Inspiration - Build an app that measures the overall mood of your family photos.

Web Detection

Web detection is perhaps the most interesting tool in the Google Vision API. It allows you to tap into Google Image Search from a raw image file, opening the possibility to:

- Find websites using similar images

- Find exact image matches

- Find partial image matches

- Label web entities (similar to image tagging)
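The four items above line up with fields on the web detection result. Here is a minimal sketch that boils the result down to plain lists of labels and URLs. The `WebDetection` interface is a hand-written subset of the response, while `summarizeWebDetection`, `WebSummary`, and the `minScore` cutoff of 0.5 are hypothetical choices for this example. Entity scores are not normalized, so pick a threshold that suits your own images.

```typescript
// Hand-written subset of a Vision web detection result (an assumption for this sketch).
interface WebEntity { entityId?: string; description?: string; score?: number; }
interface WebLink { url?: string; }
interface WebDetection {
  webEntities?: WebEntity[];
  fullMatchingImages?: WebLink[];
  partialMatchingImages?: WebLink[];
  pagesWithMatchingImages?: WebLink[];
}

// Hypothetical summary shape that is easy to store or render.
interface WebSummary {
  labels: string[];         // web entity labels, highest score first
  exactMatches: string[];   // URLs of exact image matches
  partialMatches: string[]; // URLs of partial image matches
  pages: string[];          // URLs of pages that use a matching image
}

function summarizeWebDetection(web: WebDetection, minScore = 0.5): WebSummary {
  // Collect the defined URLs from an optional list of links.
  const urls = (items?: WebLink[]): string[] =>
    (items ?? []).map(i => i.url).filter((u): u is string => !!u);

  const labels = (web.webEntities ?? [])
    .filter(e => !!e.description && (e.score ?? 0) >= minScore)
    .sort((a, b) => (b.score ?? 0) - (a.score ?? 0))
    .map(e => e.description as string);

  return {
    labels,
    exactMatches: urls(web.fullMatchingImages),
    partialMatches: urls(web.partialMatchingImages),
    pages: urls(web.pagesWithMatchingImages),
  };
}
```

A non-empty `exactMatches` list is a reasonable first signal for a copyright check, and `labels` can feed a content discovery feature.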

Inspiration - Build an app that checks for image copyright infringement or create your own image content discovery engine.

Vision API with Cloud Function

Now that we have learned how to handle data from the Vision API, we need to write some server side code to make the actual request.

Firebase Cloud Functions are just a Node.js environment, so we can use the Vision API client library. It is also possible to make requests to the REST API, but why make life more complicated?

index.ts

The cloud function is written in TypeScript, but could easily be modified for vanilla JS (just change the import statements to the require syntax). The first step is to initialize the Vision client and point to the specified bucket. From there, we will make several different requests to Cloud Vision to perform our desired tasks.

import * as functions from 'firebase-functions';
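As a rough sketch of the request step only, the function below sends one image to the three detection features discussed earlier and collects the results. It is not the lesson's original function. It assumes a client exposing `textDetection`, `faceDetection`, and `webDetection`, which is how the `ImageAnnotatorClient` from `@google-cloud/vision` behaves, but the client is passed in as a parameter so the logic can run without the library. `VisionLikeClient`, `VisionResult`, and `analyzeImage` are hypothetical names. Wiring this into a storage trigger and saving the result to Firestore is left out.

```typescript
// Subset of the Vision client used here (an assumption for this sketch).
// Each call resolves to a one-element tuple holding the response.
interface VisionLikeClient {
  textDetection(source: string): Promise<[{ textAnnotations?: unknown[] | null }]>;
  faceDetection(source: string): Promise<[{ faceAnnotations?: unknown[] | null }]>;
  webDetection(source: string): Promise<[{ webDetection?: unknown }]>;
}

// Hypothetical shape of the combined result.
interface VisionResult {
  imageUri: string;
  text: unknown[];
  faces: unknown[];
  web: unknown;
}

async function analyzeImage(
  client: VisionLikeClient,
  bucket: string,
  filePath: string
): Promise<VisionResult> {
  // Point Cloud Vision at the uploaded file in the storage bucket.
  const imageUri = `gs://${bucket}/${filePath}`;

  // The three requests are independent, so run them in parallel.
  const [[textRes], [faceRes], [webRes]] = await Promise.all([
    client.textDetection(imageUri),
    client.faceDetection(imageUri),
    client.webDetection(imageUri),
  ]);

  return {
    imageUri,
    text: textRes.textAnnotations ?? [],
    faces: faceRes.faceAnnotations ?? [],
    web: webRes.webDetection ?? null,
  };
}
```

Inside a real cloud function you would call `analyzeImage` with the Vision client plus the bucket and file path taken from the storage event, then write the returned object to a document.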

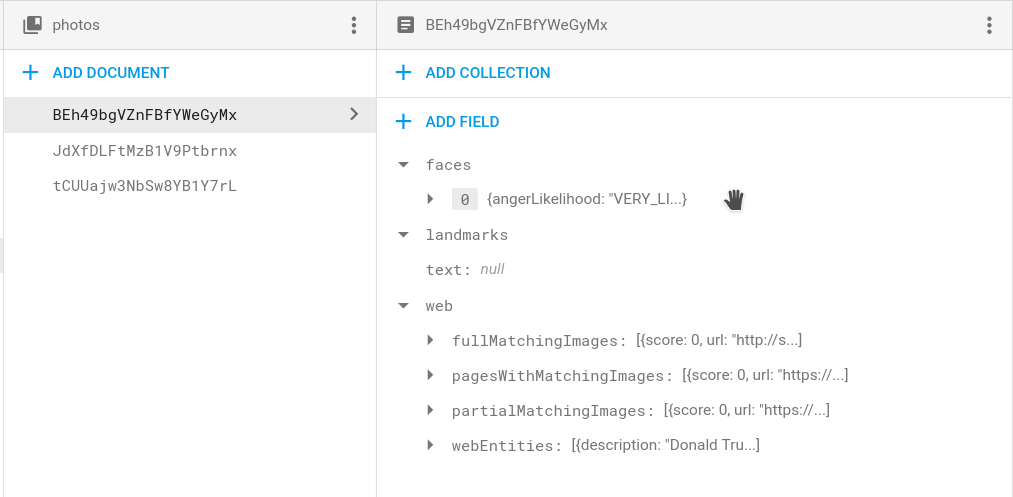

Showing the Data in the Frontend

Our cloud function saves the Cloud Vision response in the Firestore database. Simply listen to the corresponding document and display its properties as needed. In this example, we unwrap the document Observable with the async pipe and display various properties in the UI.

<div *ngIf="result$ | async as result">

The End

Cloud Vision, powered by deep learning, is a rapidly evolving technology that is putting new opportunities in the hands of creative developers. Hopefully this tutorial gives you some inspiration for building next-gen features into your app.