Google Cloud Vision With Ionic and Firebase - Not Hotdog App

Episode 83 written by Jeff Delaney

In this lesson, we’re going to combine Google’s Cloud Vision API with Ionic and Firebase to create a native mobile app that can automatically label and tag images. Most importantly, it can determine whether or not an image is a hotdog, just like the SeeFood app that made Jian-Yang very rich.

Just a few years ago, this technology would have been out of reach for the average developer. You would have needed to train your own deep neural network on tens of thousands of images with massive amounts of computing power. Today, you can extract all sorts of data from images with the Cloud Vision API, such as facial detection, text extraction, landmark identification, and more.

Although the premise is silly, this app is no joke. You will learn how to implement very powerful deep learning image analysis features that can be modified to perform highly useful tasks based on AI computer vision.

Full source code for Ionic Not Hotdog Demo.

SeeFood App Design

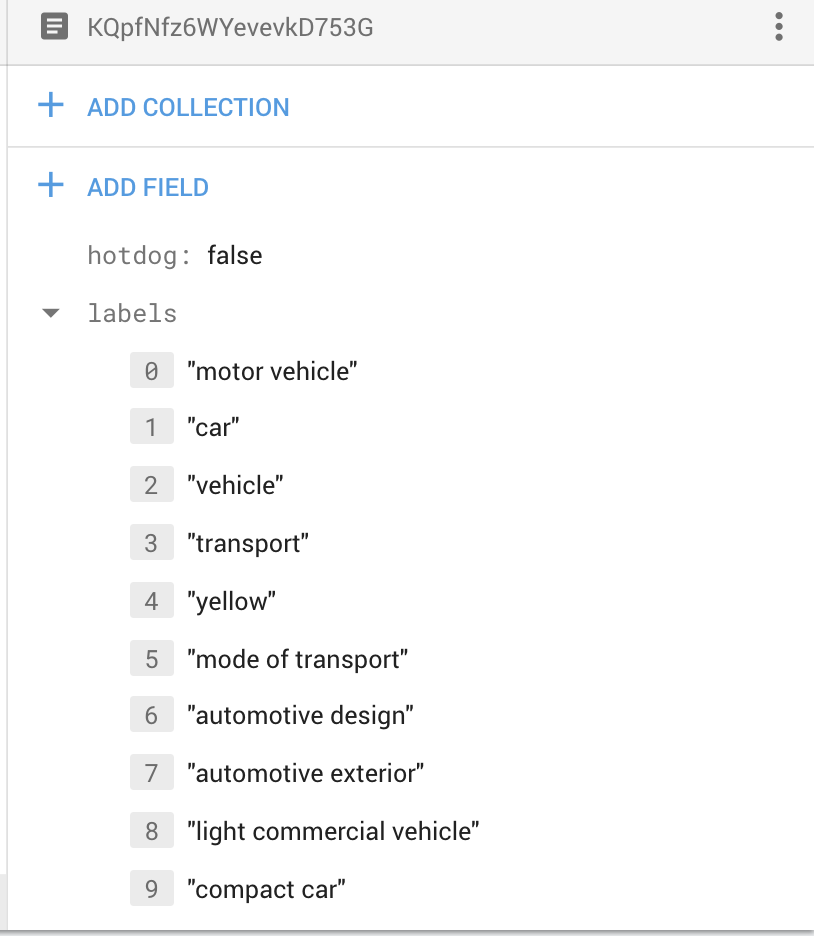

Not only will we determine if an object is a hotdog, but we will also show all 10 labels that Cloud Vision detects. The entire process can be broken down into the following steps.

- User uploads an image to Firebase storage via AngularFire2 in Ionic.

- The upload triggers a storage cloud function.

- The cloud function sends the image to the Cloud Vision API, then saves the results in Firestore.

- The result is updated in realtime in the Ionic UI.

Initial Setup

You are going to be amazed at how easy this app is to build. In just a few minutes, we go from zero to a native mobile app powered by cutting-edge machine learning technology.

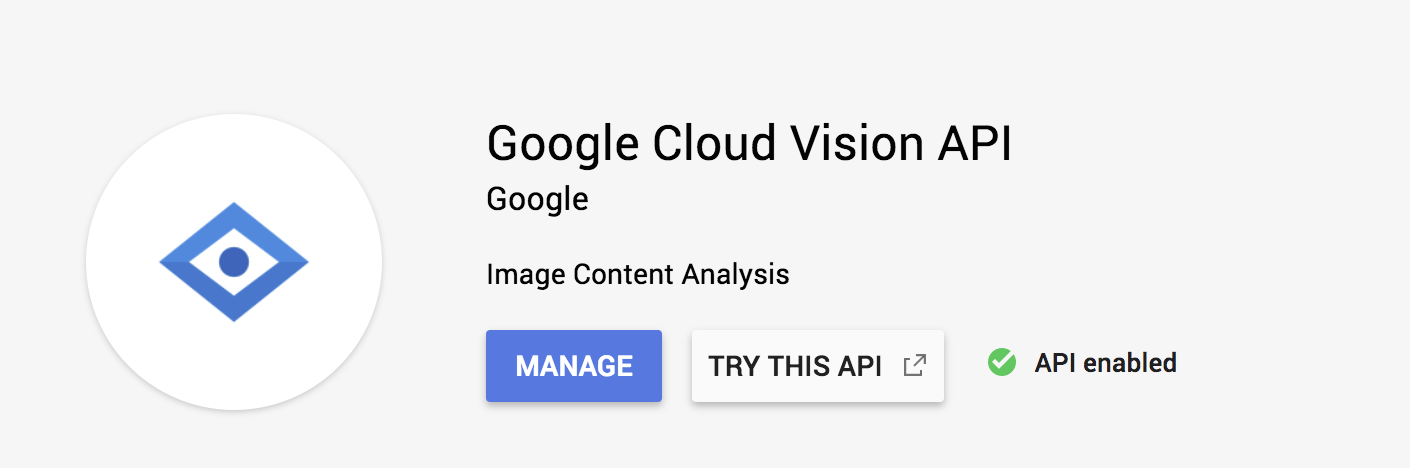

Create a Firebase Project and Enable Cloud Vision

This goes without saying, but you need a Firebase project. Every Firebase project is just a Google Cloud Platform project under the hood, so we can enable additional APIs as needed. Start by installing the Firebase CLI tools.

```bash
npm install firebase-tools -g
```

The Cloud Vision API is not enabled by default. From the GCP console, navigate to the API Library and enable the Cloud Vision API.

Initialize an Ionic Project with AngularFire

At this point, let’s generate a new Ionic app using the blank template.

```bash
ionic start visionApp blank
```

Emulating an iOS or Android Device

This tutorial uses an Ionic Native Cordova plugin for the camera, which only works on a native mobile device or emulator.

Adapting this code for a progressive web app is straightforward: the cloud function stays the same, and you only need a different way to collect files from the user. I have already covered how to upload images from a PWA in a previous lesson.

Google Vision Cloud Function

We’re going to use a storage cloud function trigger to send the file to the Cloud Vision API. After the file upload is complete, we just need to pass the stored file’s bucket URI (which looks like gs://<bucket>/<file>) to Cloud Vision, which responds with an object of labels describing the image.
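To make the URI step concrete, here is a minimal sketch of building the gs:// URI and a label-detection request body. The helper names toGcsUri and labelRequest are hypothetical; the request fields follow the Cloud Vision AnnotateImageRequest shape (image.source.imageUri, features with type and maxResults), and the default of 10 results is an assumption chosen to match the number of labels this app shows.

```typescript
// Hypothetical helpers (names are illustrative, not from the original project).

// Build the Cloud Storage URI that Cloud Vision reads the image from.
export function toGcsUri(bucket: string, filePath: string): string {
  return `gs://${bucket}/${filePath}`;
}

// Shape of a single-image label request, following the Cloud Vision
// AnnotateImageRequest fields (image.source.imageUri, features[].type/maxResults).
export interface LabelRequest {
  image: { source: { imageUri: string } };
  features: Array<{ type: 'LABEL_DETECTION'; maxResults: number }>;
}

// Ask for label detection on the uploaded file; 10 results is an assumed
// default matching the number of labels displayed in the app.
export function labelRequest(bucket: string, filePath: string, maxResults = 10): LabelRequest {
  return {
    image: { source: { imageUri: toGcsUri(bucket, filePath) } },
    features: [{ type: 'LABEL_DETECTION', maxResults }],
  };
}
```

With the official @google-cloud/vision Node client, a request shaped like this can be passed to ImageAnnotatorClient's annotateImage helper, or the gs:// string alone can be passed to its labelDetection helper.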

After we receive this response, we format it as an array of labels and save it in the Firestore NoSQL database, where the Ionic app can read it.

Pro Tip - Firebase storage functions cannot be scoped to specific files or folders. I recommend creating an isolated bucket for any file uploads that trigger functions.
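Because the trigger fires for every object in its bucket, the function has to decide for itself which uploads are worth analyzing. A minimal sketch, assuming a hypothetical photos/ folder convention; UploadedObject and shouldAnalyze are illustrative names, not part of the Firebase SDK.

```typescript
// Hypothetical filter: a storage trigger fires for every object in its bucket,
// so the function decides which uploads to send to Cloud Vision.
// The "photos/" prefix is an assumed naming convention, not a Firebase feature.
export interface UploadedObject {
  name?: string;        // full object path, e.g. "photos/abc.jpg"
  contentType?: string; // MIME type recorded at upload time
}

export function shouldAnalyze(obj: UploadedObject, prefix = 'photos/'): boolean {
  // Ignore anything outside the folder this app writes to.
  if (!obj.name || !obj.name.startsWith(prefix)) {
    return false;
  }
  // Only images are worth sending to the Vision API.
  return (obj.contentType ?? '').startsWith('image/');
}
```

If you do create a dedicated bucket, the v1 firebase-functions API can target it directly with functions.storage.bucket('your-bucket-name').object(), and a check like this becomes a second line of defense.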

Initialize Cloud Functions in Ionic

Make sure you are using TypeScript in your functions environment. If you are starting from scratch, run the following command and choose TypeScript when prompted:

```bash
firebase init functions
```

index.ts

Our Cloud Function is async, which just means that it should return a Promise. Here’s a step-by-step breakdown of how it works.

- The user uploads a photo, which automatically invokes the function.

- We format the image URI and send it to the Cloud Vision API.

- Cloud Vision responds with data about the image.

- We format and save this data in the Firestore database.

The user will be subscribed to this data in the Ionic frontend, making the labels magically appear on their device when the function is complete.

```typescript
import * as functions from 'firebase-functions';
```
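The steps above can be sketched as a single async handler. This is a minimal sketch, not the original implementation: it assumes the Cloud Vision and Firestore calls are passed in as functions so the flow can run without Firebase, and handleUpload, DetectLabels, SaveResult, the case-insensitive "hot dog" match, and the photos/<id> document layout are all hypothetical choices.

```typescript
// Hypothetical adapter types; only the `description` field of Cloud Vision's
// label annotations is used here.
export interface LabelAnnotation {
  description?: string | null;
}

export interface VisionDoc {
  hotdog: boolean;
  labels: string[];
}

// Sends a gs:// URI to Cloud Vision and resolves with its label annotations.
export type DetectLabels = (gcsUri: string) => Promise<LabelAnnotation[]>;
// Writes the formatted result to Firestore under the given document ID.
export type SaveResult = (docId: string, data: VisionDoc) => Promise<void>;

// Returns a Promise, so the Cloud Functions runtime waits for all the work.
export async function handleUpload(
  bucket: string,
  filePath: string,
  detectLabels: DetectLabels,
  save: SaveResult,
): Promise<void> {
  // Format the image URI and send it to Cloud Vision.
  const annotations = await detectLabels(`gs://${bucket}/${filePath}`);

  // Flatten the response into plain label strings, dropping empty entries.
  const labels = annotations
    .map((a) => a.description ?? '')
    .filter((d) => d.length > 0);

  // Compare case-insensitively rather than relying on label capitalization.
  const hotdog = labels.some((l) => l.toLowerCase() === 'hot dog');

  // Assumed convention: "photos/<id>.jpg" in Storage -> document "<id>".
  const fileName = filePath.split('/').pop() ?? filePath;
  const docId = fileName.replace(/\.[^.]+$/, '');

  await save(docId, { hotdog, labels });
}

// Possible wiring (firebase-functions v1 API, @google-cloud/vision, firebase-admin;
// an assumption, adjust to the versions you have installed):
// const vision = new ImageAnnotatorClient();
// export const imageTagger = functions.storage.object().onFinalize((object) =>
//   handleUpload(
//     object.bucket,
//     object.name!,
//     async (uri) => (await vision.labelDetection(uri))[0].labelAnnotations ?? [],
//     async (id, data) => { await admin.firestore().doc(`photos/${id}`).set(data); },
//   ));
```

Injecting the two service calls keeps the formatting logic testable with plain fakes; in the deployed function they would be thin wrappers around the real SDK clients.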

The end result is a Firestore document that holds the array of labels along with a boolean hotdog flag.
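A hypothetical TypeScript description of such a document is sketched below, assuming only the two fields the vision page template relies on (hotdog and labels). The isVisionResult guard is an illustrative addition for the client side, where data read back from Firestore is untyped and may be missing while the function is still running.

```typescript
// Hypothetical shape of the result document: a hotdog flag plus the labels.
export interface VisionResult {
  hotdog: boolean;
  labels: string[];
}

// Runtime check for data read back from Firestore, where the compiler cannot
// guarantee the shape (for example, while the document does not exist yet).
export function isVisionResult(value: unknown): value is VisionResult {
  if (typeof value !== 'object' || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.hotdog === 'boolean' &&
    Array.isArray(v.labels) &&
    v.labels.every((l) => typeof l === 'string')
  );
}
```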

Ionic App

We’re starting from a brand new Ionic app with the blank template. We really only need one component to make this app possible. Make sure you’re back in the Ionic root directory and generate a new page.

```bash
ionic g page vision
```

Then, in app.component.ts, set the vision page as your Ionic root page.

```typescript
import { VisionPage } from '../pages/vision/vision';
```

Installing AngularFire2 and Dependencies

Let’s add some dependencies to our Ionic project:

```bash
npm install angularfire2 firebase --save
```

At this point we need to register AngularFire2 and the native camera plugin in the app.module.ts. My full app module looks like this (make sure to add your own Firebase project credentials):

```typescript
import { MyApp } from './app.component';
```

Uploading Files in Ionic with AngularFire2

There’s quite a bit going on in the component, so let me break this down step by step.

- The user taps the button that calls captureAndUpload() to bring up the device camera.

- The camera returns the image as a Base64 string.

- We generate an ID that is used as both the image filename and the Firestore document ID.

- We listen to this document in Firestore.

- An upload task is created to transfer the file to Storage.

- We wait for the cloud function to write its results to Firestore.

- Done.
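The shared-ID step is what ties an upload to its result. A minimal sketch, assuming a hypothetical layout of photos/<id>.jpg in Storage and photos/<id> in Firestore; createPhotoId, photoPaths, and toDataUrl are illustrative names, and the AngularFire2 wiring in the trailing comments is an assumption to check against your installed version.

```typescript
// Generate one ID for both the Storage file and the Firestore document.
// Timestamp plus a random suffix; any collision-resistant ID would do.
export function createPhotoId(): string {
  return `${Date.now().toString(36)}-${Math.random().toString(36).slice(2, 10)}`;
}

// Assumed layout: photos/<id>.jpg in Storage, photos/<id> in Firestore.
export function photoPaths(id: string): { storagePath: string; docPath: string } {
  return { storagePath: `photos/${id}.jpg`, docPath: `photos/${id}` };
}

// The camera plugin returns raw Base64; a data URL also records the MIME type.
export function toDataUrl(base64: string, mimeType = 'image/jpeg'): string {
  return `data:${mimeType};base64,${base64}`;
}

// Possible wiring inside the page component (AngularFire2; assumed):
// const { storagePath, docPath } = photoPaths(createPhotoId());
// this.result$ = this.afs.doc(docPath).valueChanges();   // start listening first
// this.storage.ref(storagePath).putString(toDataUrl(base64), 'data_url');
```

Subscribing to the document before starting the upload means the page cannot miss the function's write, however quickly it arrives.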

```typescript
import { Component } from '@angular/core';
```

Vision Page HTML

We need a button to open the user’s camera and start the upload.

```html
<button ion-button icon-start (tap)="captureAndUpload()">
```

Then we need to unwrap the result$ observable. If hotdog === true, we know we’ve got ourselves a hotdog and can update the UI accordingly. We also loop over the labels to display an Ionic chip for each one.

```html
<ion-col *ngIf="result$ | async as result">
```

Next Steps

I see a huge amount of potential for mobile app developers with the Cloud Vision API. There are many business applications that can be improved with AI vision analysis, which I plan on covering in a future advanced lesson where we dive deeper into the API’s full capabilities.